Video: Products over Progress - David Hussman ( https://www.youtube.com/watch?v=p7DeHPmu0nw )

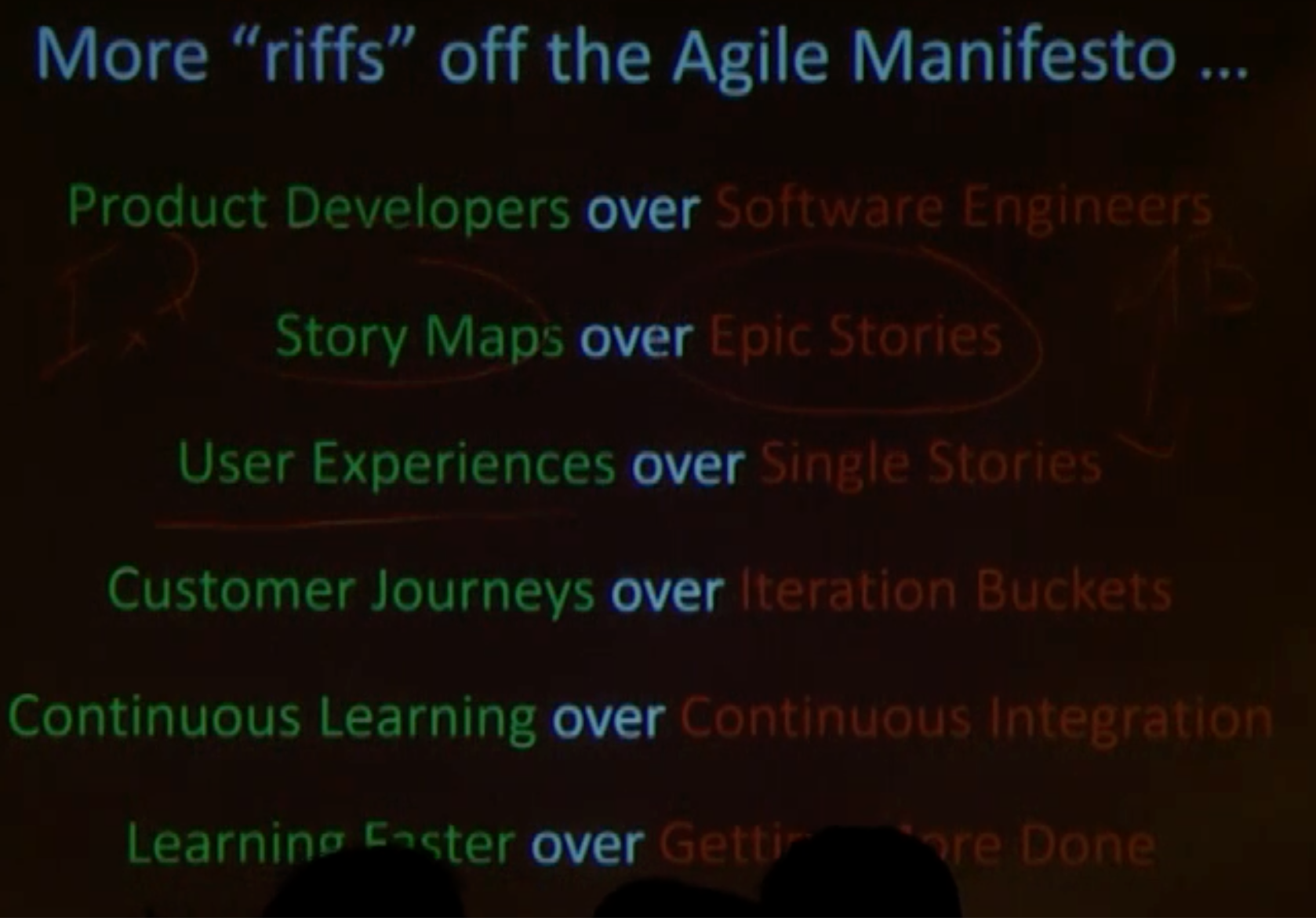

company: DevJam

rename ‘software engineer’ -> ‘product developers’

Who are we going to impact?

Who cares about what we’re doing?

NOT “who are the users”: often non-users are impacted

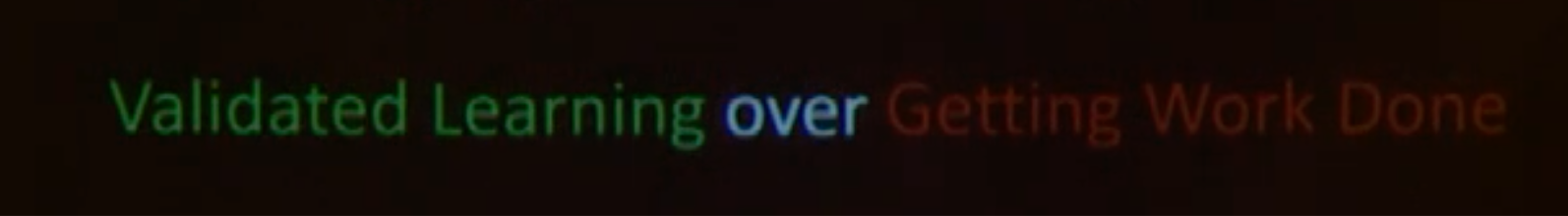

one of the mistakes: too focussed on construction, get things done

[ ] products over process - speaker

every time we were starting to deliver consistently, we needed to ask “are we doing the right things?”

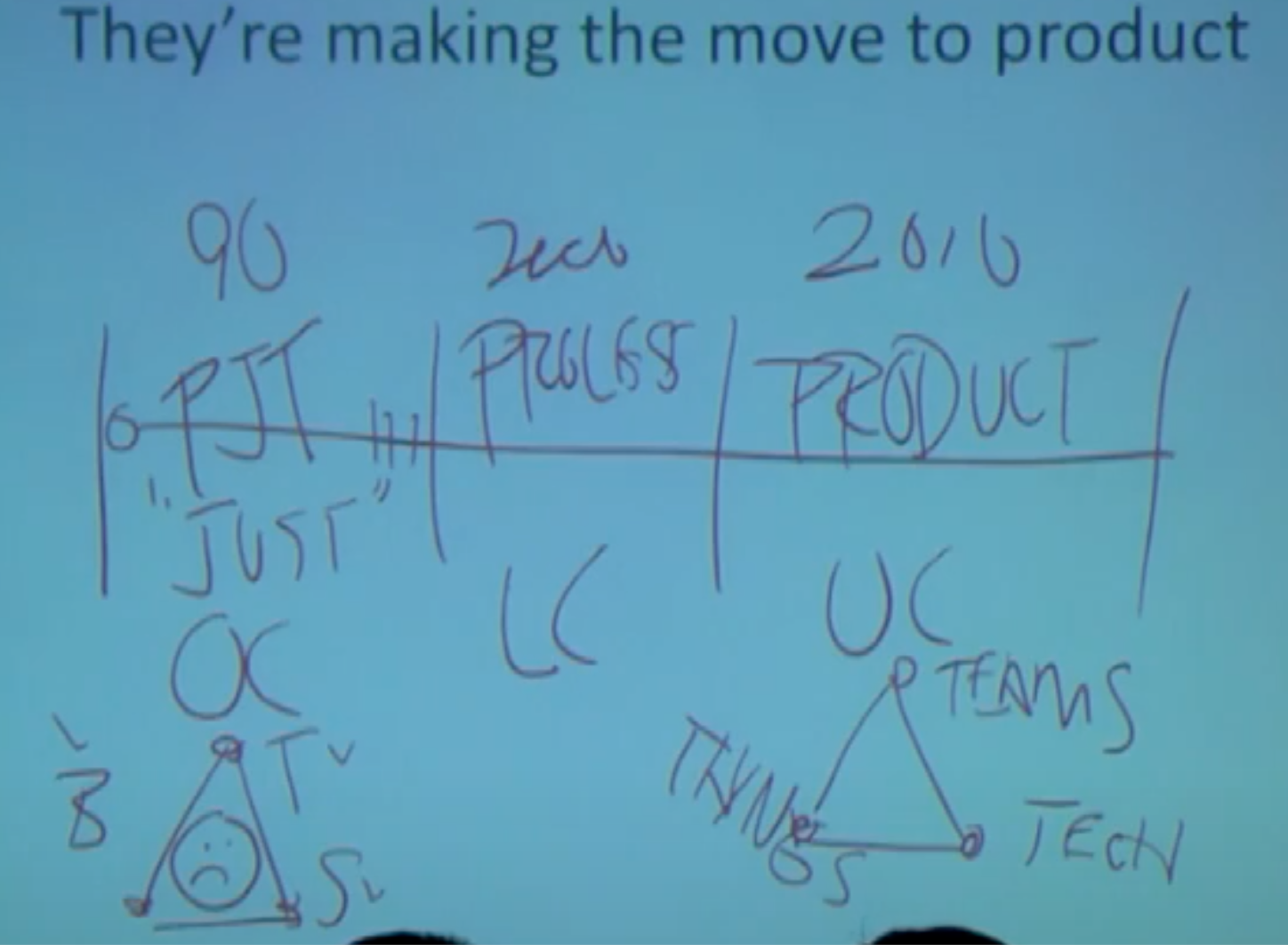

1990 project

just get things done

“if we’d get it done, we’d be successful” (we didn’t get much done)

triangle: time - scope - budget

-> rewarded, even if we built the wrong thing

2000 process

less certain, question things

requirement -> story

2010 product

very uncertain

triangle: teams - tech - things (aka product)

1 team, 1 tech, 1 product -> all the agile books

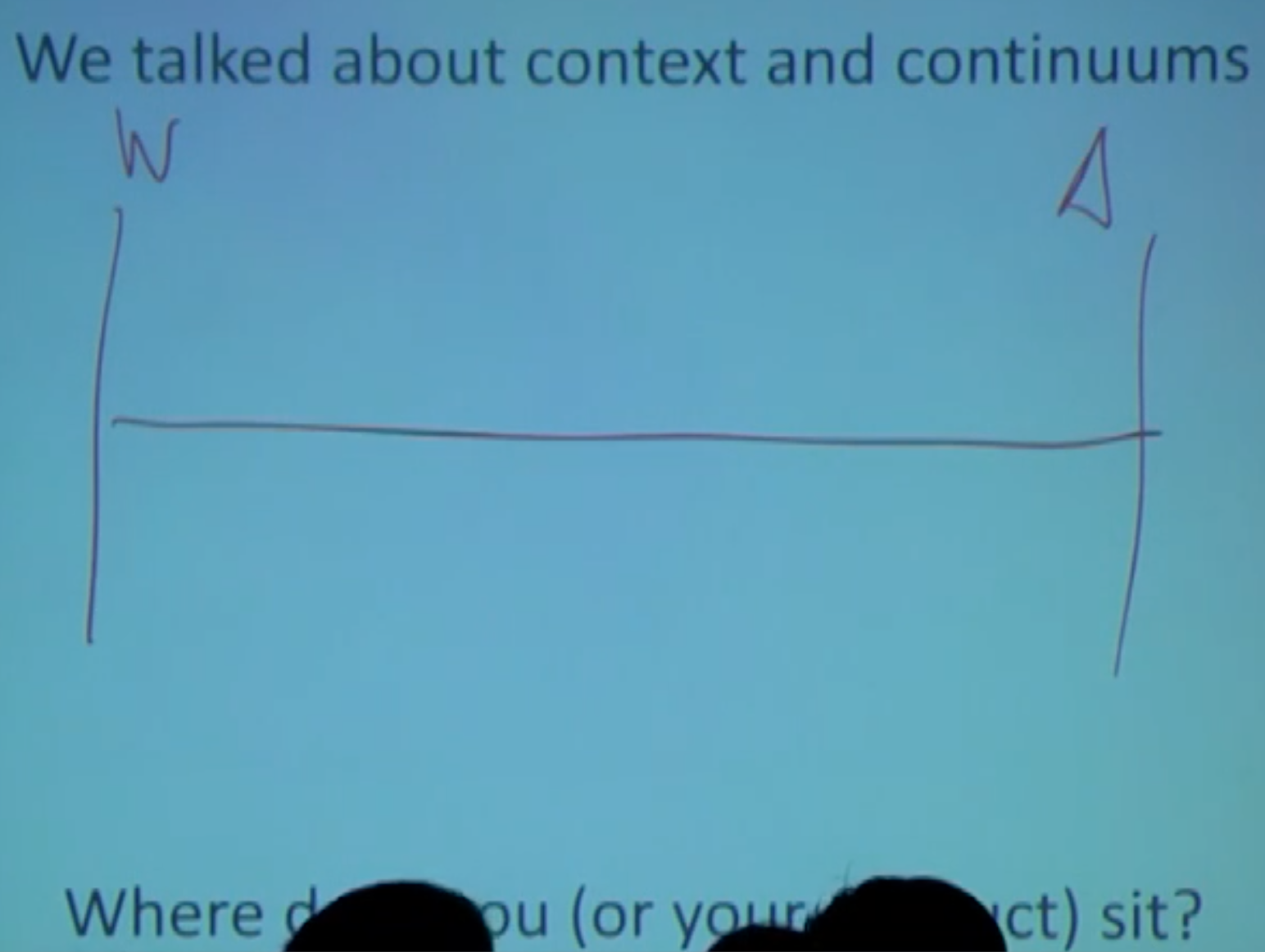

waterfall vs agile

more interesting:

known - unknown

certain - uncertain

static - dynamic

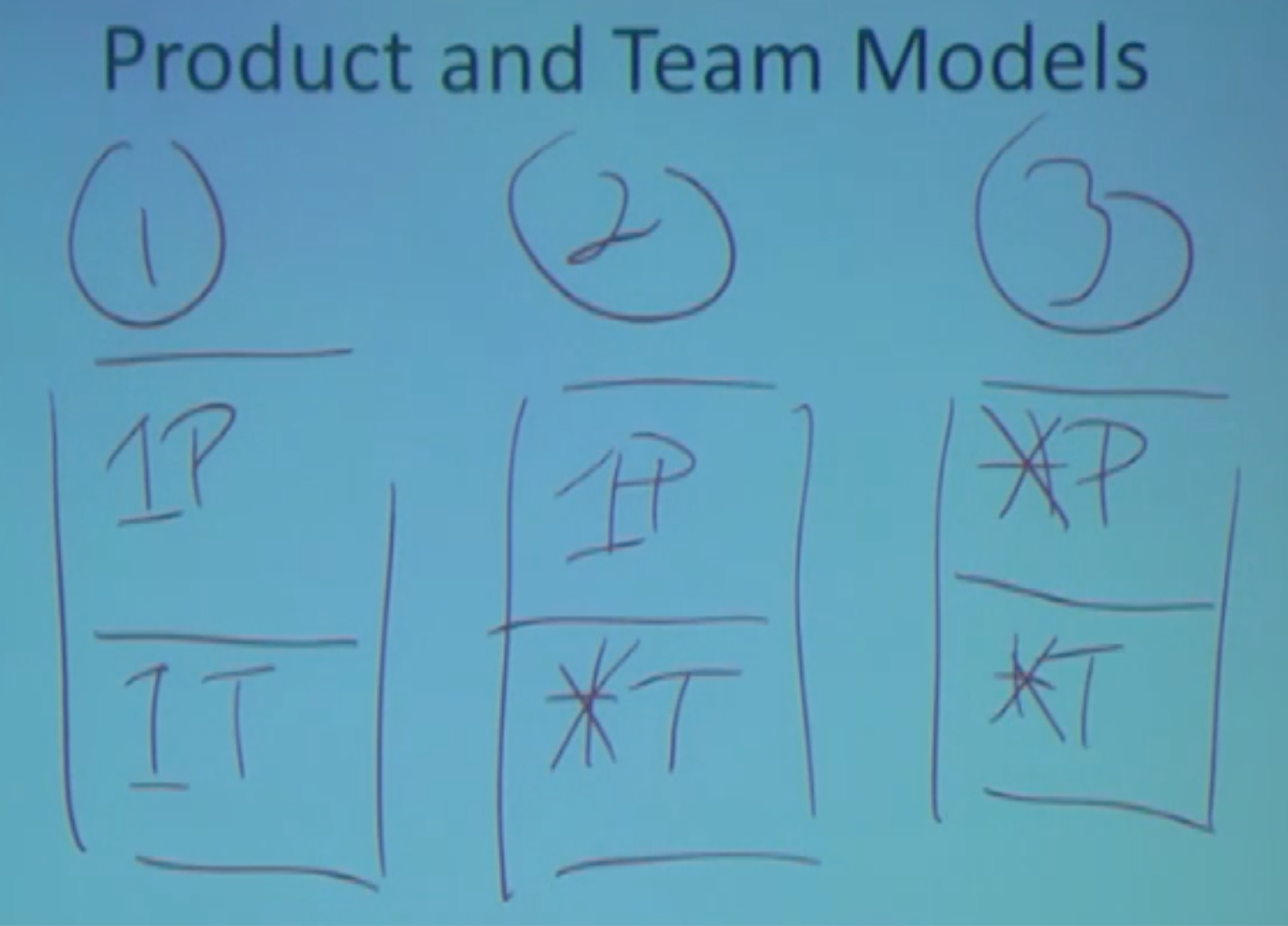

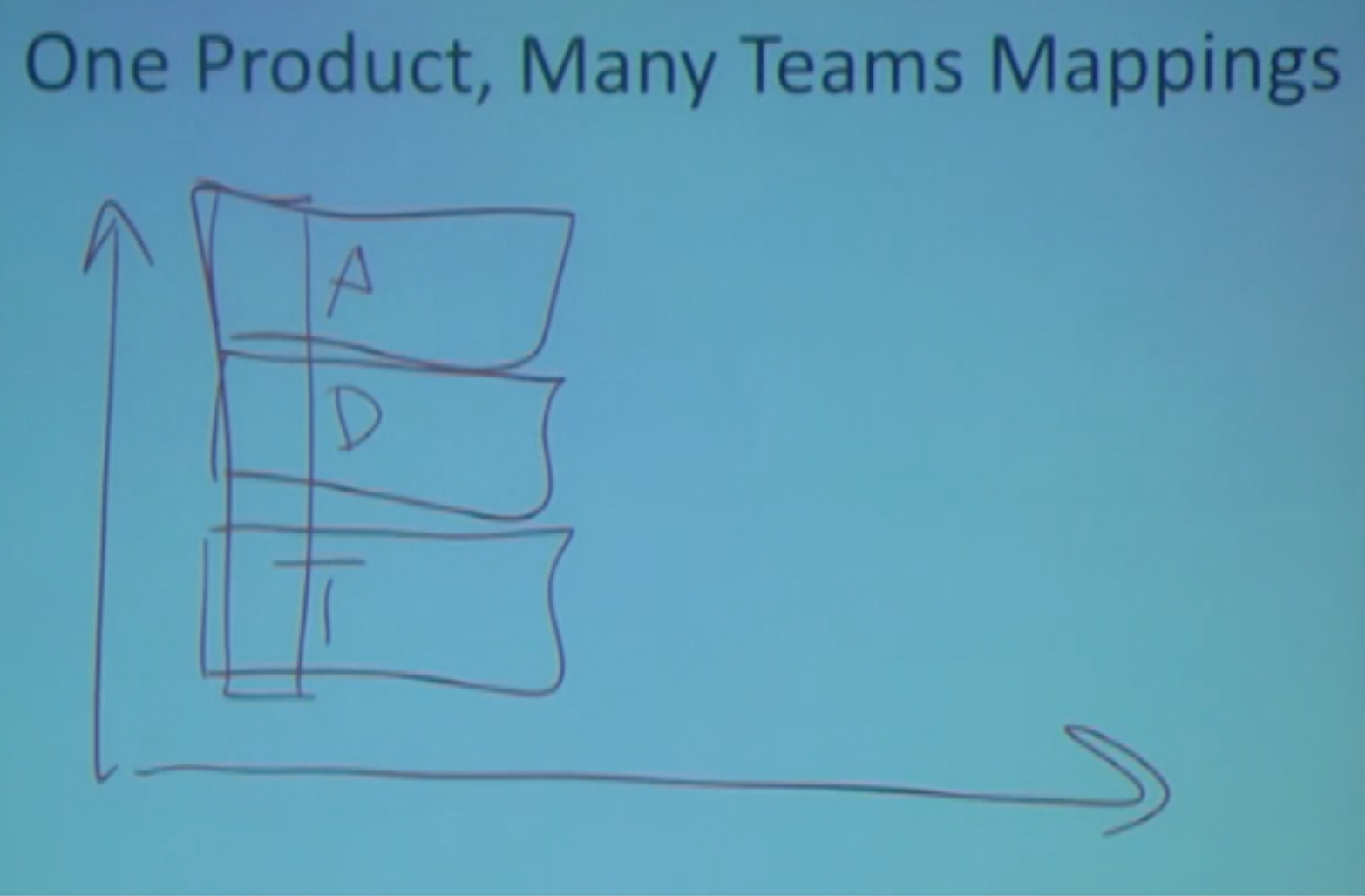

(1)

1 product

1 team

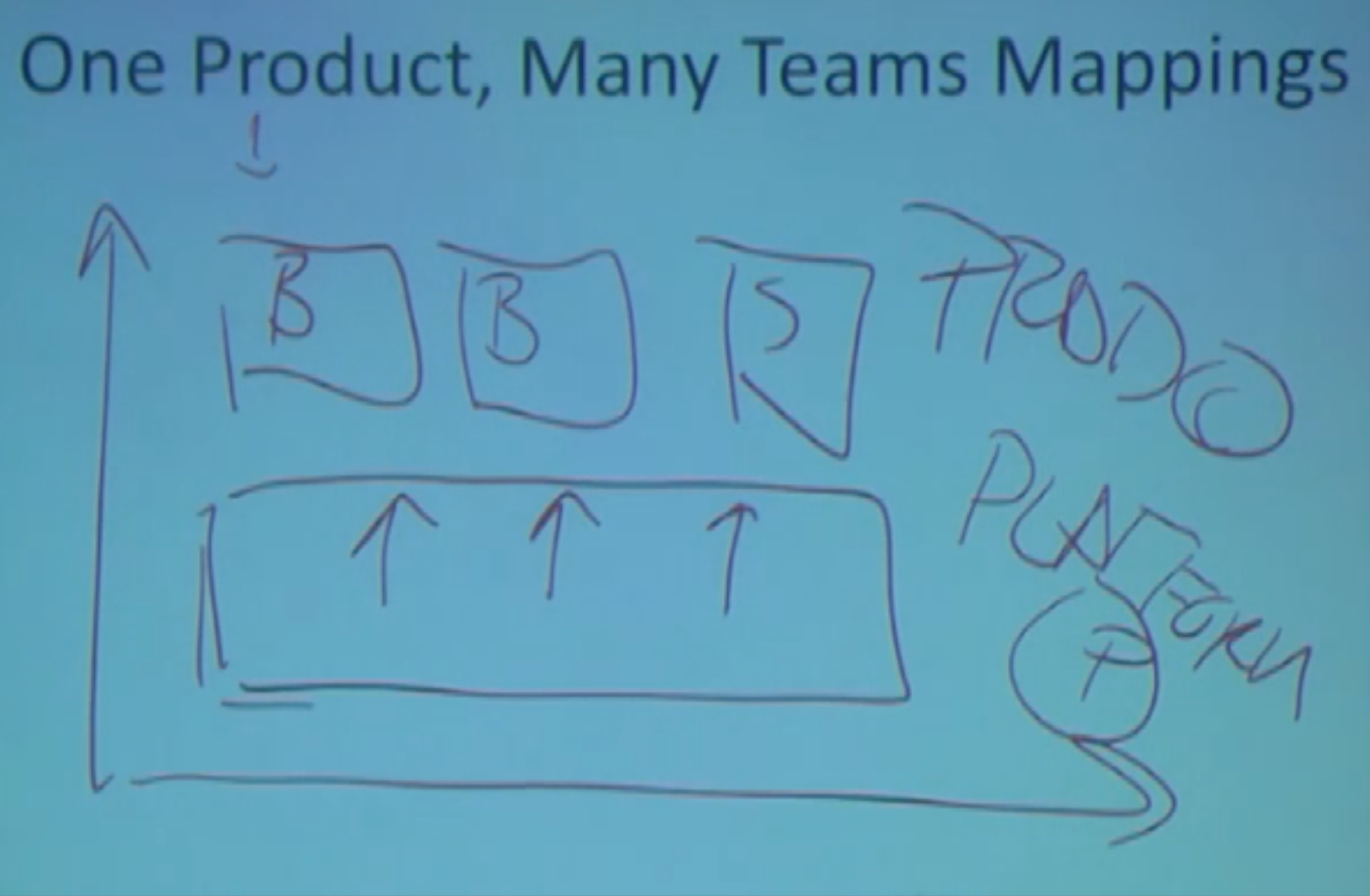

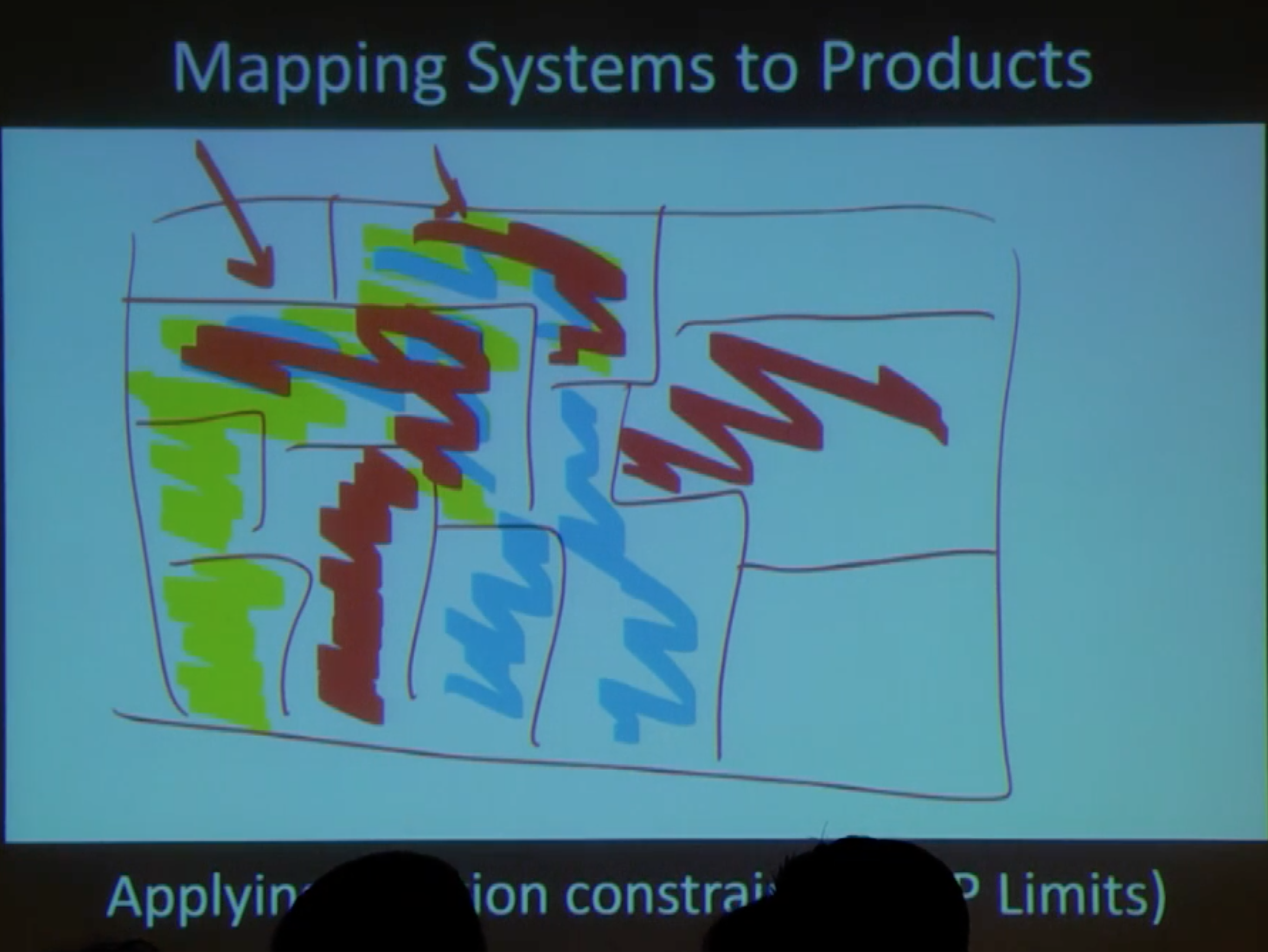

(2) 1 product many teams

(3) many products many teams

extra column: “valuable” or “validated”

“when you don’t pull things into progress, until you have some idea how you are going to validate them, you do less of the wrong thing”
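The pull rule in the quote above can be sketched as a small board policy. This is only an illustration, not something shown in the talk: the `Story` and `Board` classes, the column names, and the `validation_plan` field are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Story:
    title: str
    # Hypothetical field: how we intend to check that the story had an impact.
    validation_plan: Optional[str] = None


@dataclass
class Board:
    # Column names are assumptions; "validated" is the extra column from the notes.
    columns: dict = field(default_factory=lambda: {
        "todo": [], "in_progress": [], "done": [], "validated": []
    })

    def add(self, story: Story) -> None:
        self.columns["todo"].append(story)

    def pull_into_progress(self, story: Story) -> None:
        # Refuse to start work until there is some idea of how to validate it.
        if not story.validation_plan:
            raise ValueError(f"no validation plan for: {story.title}")
        self.columns["todo"].remove(story)
        self.columns["in_progress"].append(story)


board = Board()
vague = Story("add wishlist")
clear = Story("one-click reorder", validation_plan="repeat purchases per week go up")
board.add(vague)
board.add(clear)
board.pull_into_progress(clear)  # allowed: it has a validation plan
```

Calling `board.pull_into_progress(vague)` raises a `ValueError`, which is the point: the story stays in "todo" until someone can say how it would be validated.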

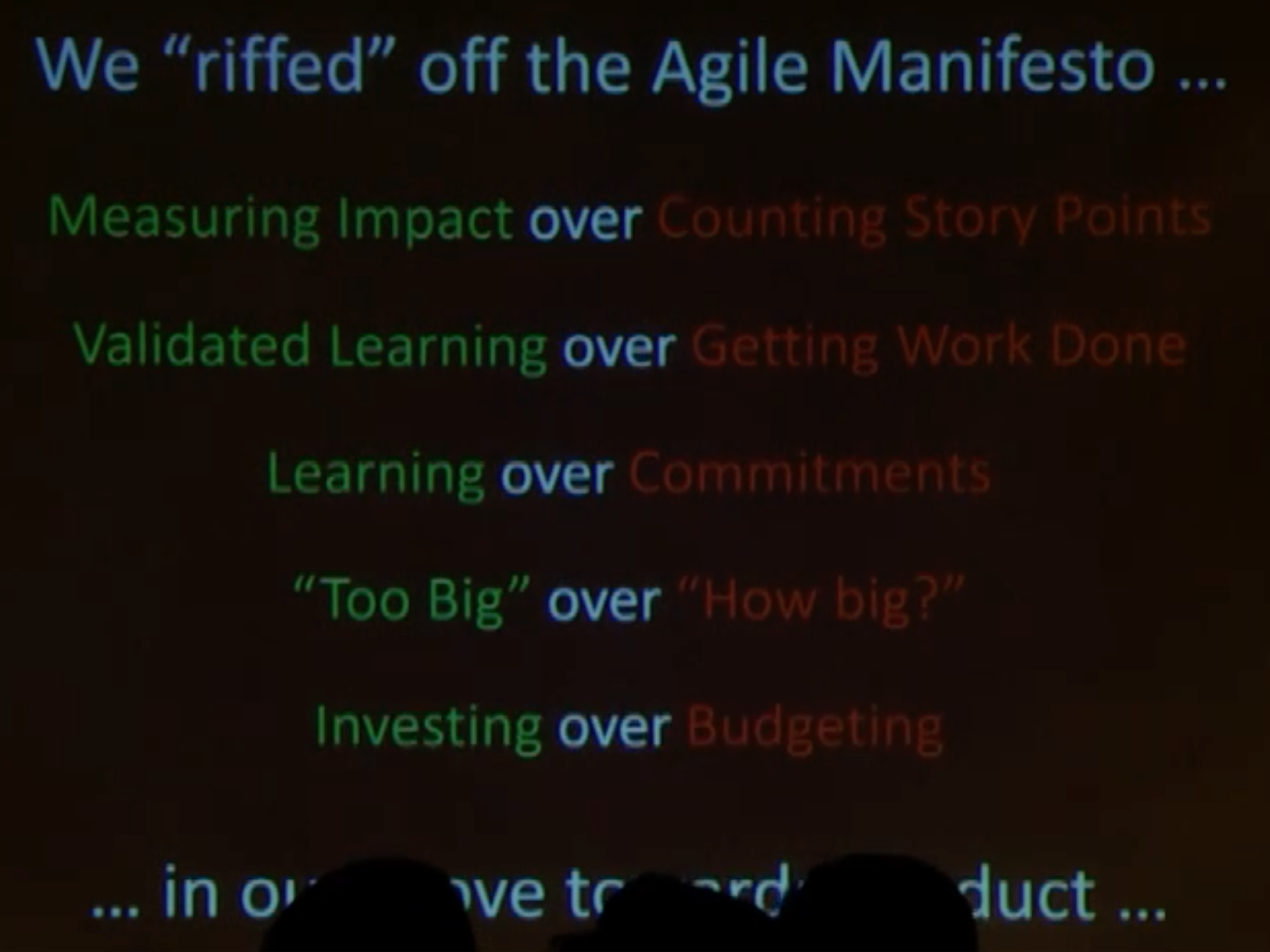

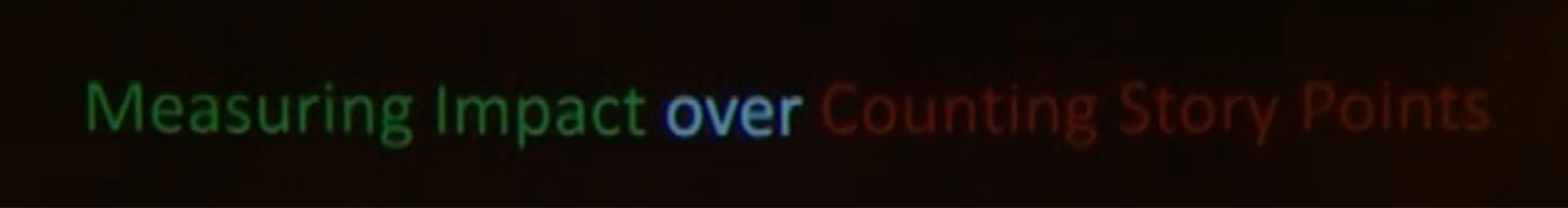

story points aren’t actually value delivered

you’re merely measuring progress

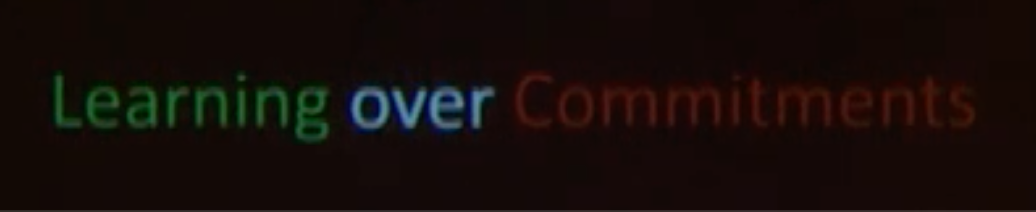

“you didn’t meet your commitments!”

fear

no learning

learn why you missed those commitments

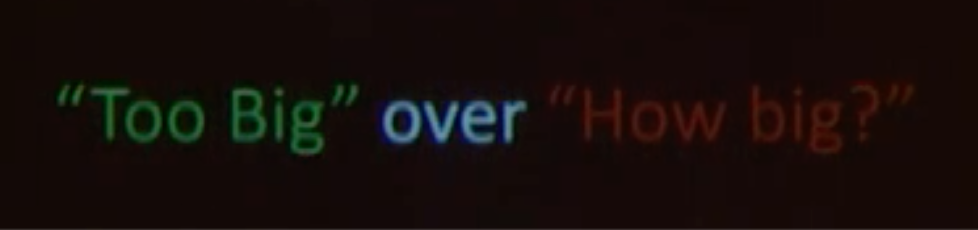

start breaking it down into smaller pieces

budgeting = certain

investing reflects uncertainty

scaling agile -> often scale process

analyst

dev

test

agile did well: cross functional

product & platform producer -> consumer

eg. retail: browse, buy, ship

customer does not care about technology

CI: do you do something with it?

if you’re getting the wrong stuff done faster, you should not celebrate that

groups of engineers, sitting together, telling customer stories

not taking orders for a sprint

“more developers should act like doctors instead of waiters”

prototypes

interview customers (even harder for companies than building prototypes)

“the only code with no bugs, is no code”

intercom.io -> in-app communication, not “chat”

we start conversations with customers

customers start conversations with us

“you can’t always talk to your customers”

FIT: let’s take someone’s request and kill it

CHAP: create control & experiment group, let’s inject faults

“so confident in their delivery, they dare to inject damage into their system”

“now they can focus exploration”
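The control/experiment idea can be sketched roughly as follows. This is not how FIT or CHAP are implemented (the notes do not describe their internals); `group_for`, `handle`, and `run_experiment` are hypothetical names, and the "fault" is just a flag that forces a fallback response.

```python
import hashlib


def group_for(request_id: str, experiment_share: float = 0.5) -> str:
    """Deterministically assign a request to 'control' or 'experiment'."""
    digest = hashlib.sha256(request_id.encode()).digest()
    bucket = digest[0] / 256  # value in [0, 1)
    return "experiment" if bucket < experiment_share else "control"


def handle(request_id: str, inject_fault: bool) -> str:
    """Hypothetical service call with a fallback when a dependency is killed."""
    if inject_fault:
        # The dependency is treated as down; serve a degraded response.
        return "fallback"
    return "full"


def run_experiment(request_ids):
    results = {"control": [], "experiment": []}
    for rid in request_ids:
        group = group_for(rid)
        # Faults are injected only into the experiment group.
        results[group].append(handle(rid, inject_fault=(group == "experiment")))
    return results


results = run_experiment([f"req-{i}" for i in range(1000)])
```

Comparing what the two groups experienced is what turns the injected fault into an experiment rather than an outage.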

product validation over code proliferation

TDD: write test, then write the code

-> if you write bad tests, you write bad code

-> so tests speak to design

Acceptance TDD: write test for the stories, then write stories

-> even less code that was wrong
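As a hedged illustration of the test-first order, here is an acceptance-style test phrased as a statement of value, plus the minimal code that satisfies it. The retail scenario, the `Shop` class, and its methods are hypothetical and not from the talk.

```python
import unittest


class Shop:
    """Hypothetical code, written only after the tests below were agreed on.

    It appears first in the file only so the module can be read top to bottom.
    """

    def __init__(self, stock):
        self.stock = dict(stock)
        self.shipments = []

    def buy(self, customer, item):
        if self.stock.get(item, 0) <= 0:
            return False
        self.stock[item] -= 1
        self.shipments.append((customer, item))
        return True


class ShopperCanBuyWhatTheyBrowsed(unittest.TestCase):
    # The tests state who is trying to do what, not which buttons to click.
    def test_buying_an_in_stock_item_results_in_a_shipment(self):
        shop = Shop({"book": 1})
        self.assertTrue(shop.buy("ada", "book"))
        self.assertEqual(shop.shipments, [("ada", "book")])

    def test_out_of_stock_item_is_not_shipped(self):
        shop = Shop({"book": 0})
        self.assertFalse(shop.buy("ada", "book"))
        self.assertEqual(shop.shipments, [])


def run_acceptance_tests():
    loader = unittest.defaultTestLoader
    suite = loader.loadTestsFromTestCase(ShopperCanBuyWhatTheyBrowsed)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_acceptance_tests()` returns a result object whose `wasSuccessful()` method reports whether the story-level statements hold.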

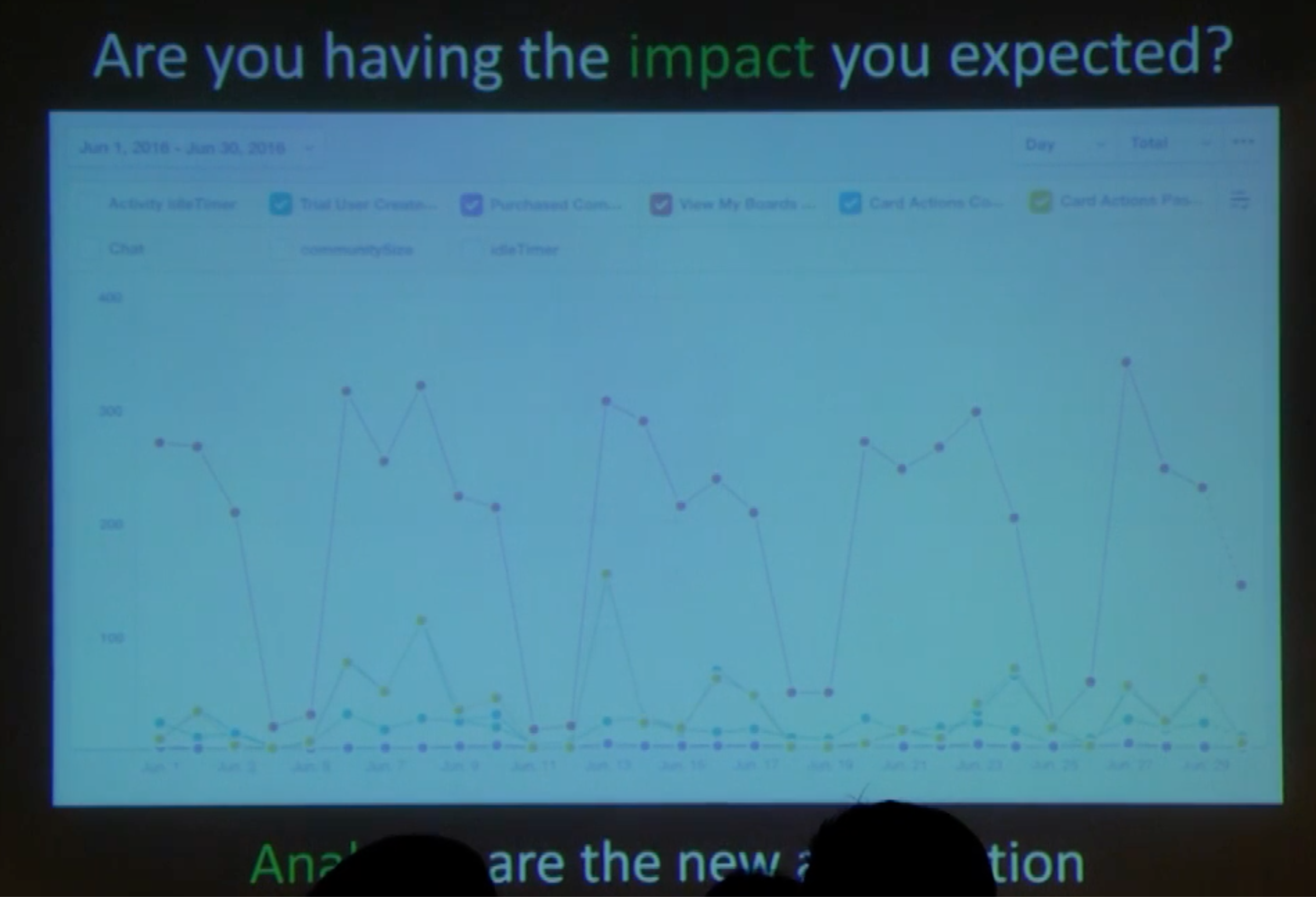

Impact

don’t start on a story, until you know how to measure the impact

Product developers: beyond getting it done and doing it well, is it actually a good idea?

====

MANY products, MANY teams

bottlenecks

agile coach -> product coach

someone at intel

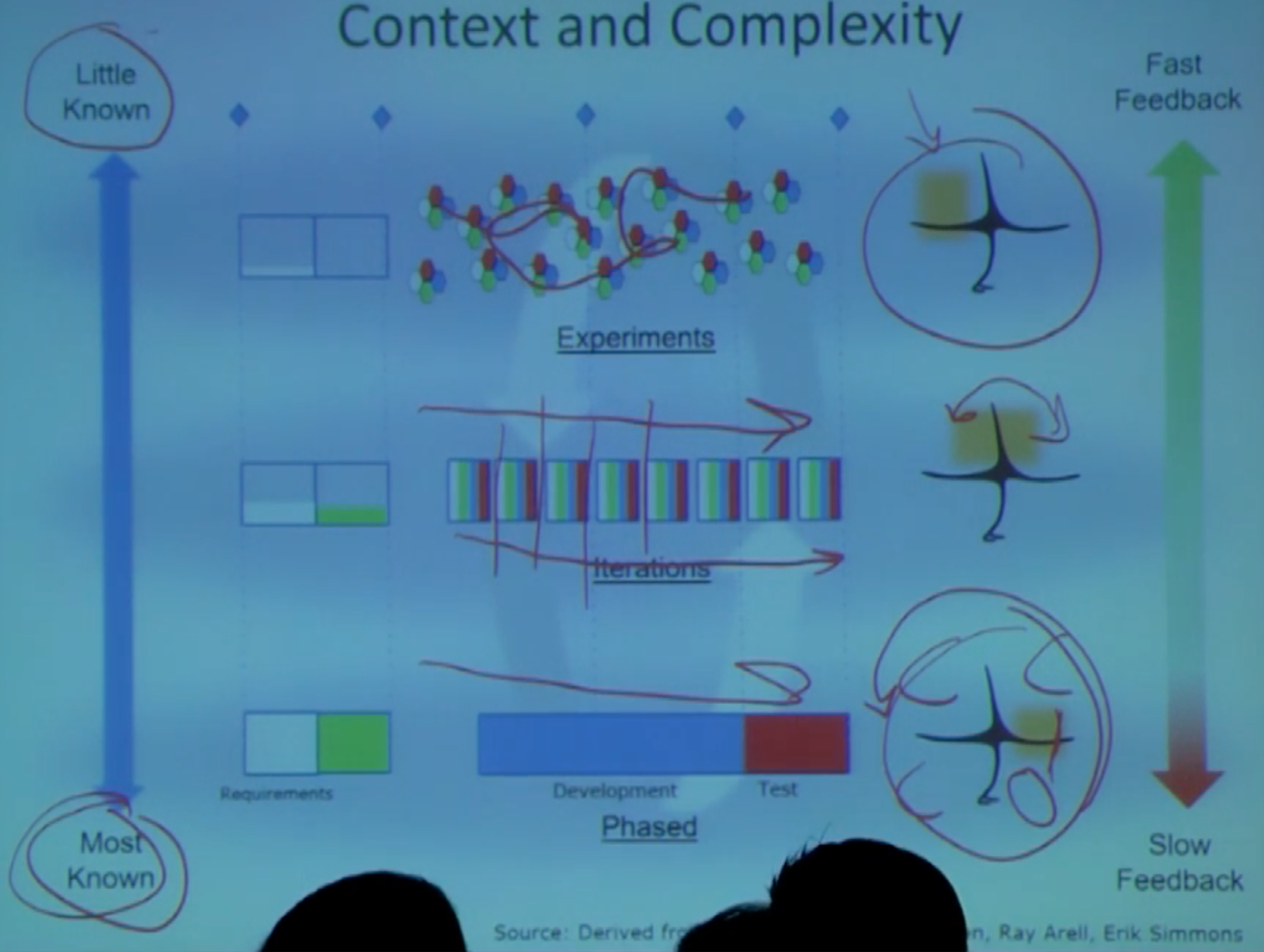

Cynefin model (right of the slide)

Obvious -> Complicated -> Complex -> Chaotic

data science -> experiments, not construction -> experiments as first class citizens

focus: learning

in some systems

you can’t manage complexity

you can try to constrain the complexity

WHO do I learn from (“design targets”, Alan Cooper)

THINGS trying to do - examples -> “most obvious things they try to do”

“journey” -> where do we want to go

primary dimension: what do we want to learn

secondary dimension: how many stories can we get done in a sprint

Product team

UX, dev, architect, tester, project manager

UX tells the stories, because they have had the most face time with users

open space topic: how do you do discovery in a distributed environment?

In YOUR team: How many people can tell a real customer story? -> how close are you to product, away from process

multiple perspectives

- valuable - product (tester*)

- useable - design (UX)

- feasible - engineering (dev)

\* what do you choose to test?

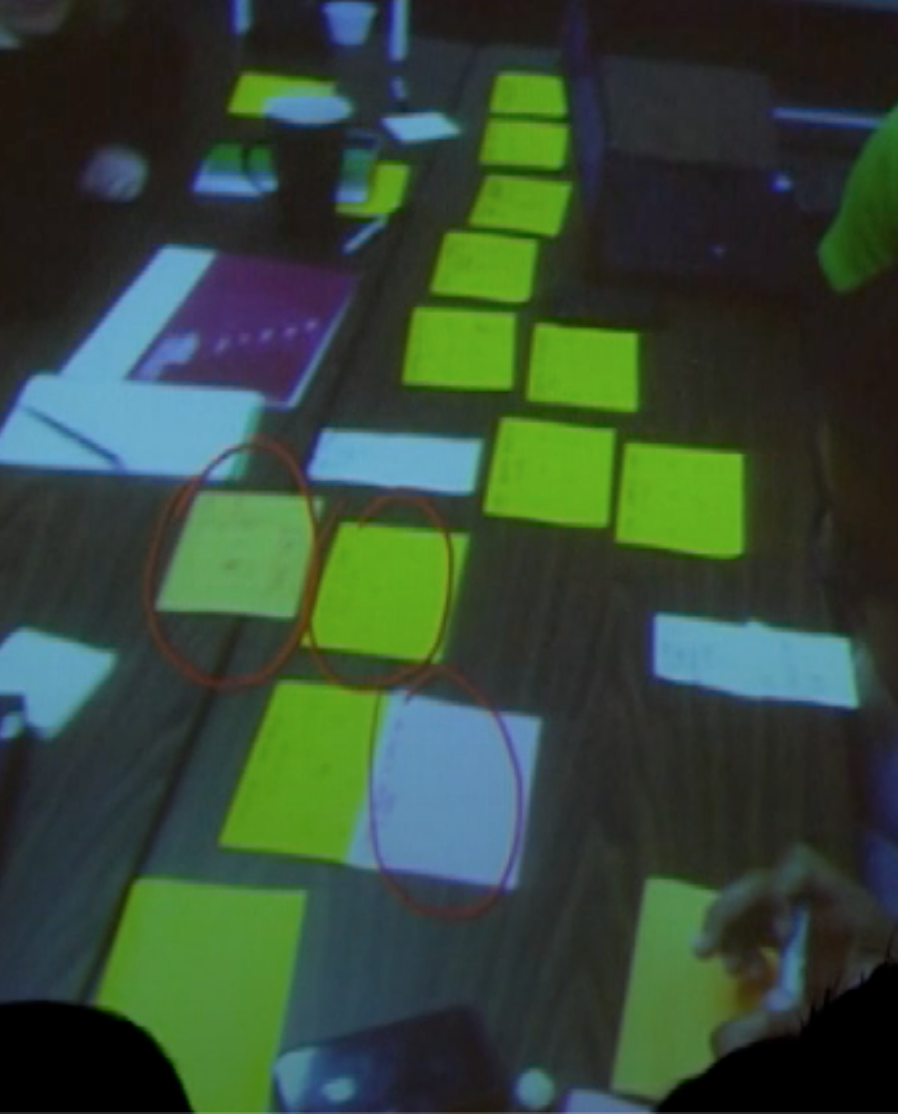

on the table (circled)

-> design - story - test

WHO is trying to do WHAT

what might it look like

how will we validate it with tests

test != click here

test = statements of value

test = how are you impacting them

let’s add clarity with tests

test = validation

when is it done <-vs-> when is there value

MVP <> Minimal Viable Learning

Doing the right thing

assume you’re getting stuff done, are you doing the right thing?

Q&A

delivery cadence

1 sprint ahead of discovery cadence

“grooming the backlog”

planning -> off topic “should we be doing this?”

- interviews

- prototypes

what if this sprint, we need to do more discovery?

---

too much discovery up front?

eg. customer - 1 year product cycle

design sprint

“no more than 10% investment in raw discovery” -> evolved into blended model

4 sprints, then +- 20% estimate

my fear: “discover their way into not delivering”